People-centric approaches to algorithmic explainability

THE CONTEXT

Providing transparency and explainability of artificial intelligence (AI) presents complex challenges across industry and society, raising questions around how to build confidence and empower people in their use of digital products. The purpose of this report is to contribute to cross-sector efforts to address these questions. It shares the key findings of a project conducted in 2021 between TTC Labs and Open Loop in collaboration with the Singapore Infocomm Media Development Authority (IMDA) and Personal Data Protection Commission (PDPC).

Through this project, TTC Labs and Open Loop developed a series of operational insights to bring greater transparency to AI-powered products and to develop related public policy proposals. These learnings are intended for both policymakers and product makers: those developing frameworks, principles and requirements at the government level, and those building and evolving AI-driven apps and websites. By improving people's understanding of AI, we can foster greater trust in digital services.


THE DESIGN JAM

Craigwalker led the project using a design jam as a bottom-up approach to co-create people-centric policy. We brought together emerging startups from around the world—such as Betterhalf.ai, Newsroom, MyAlice, and Zupervise—to explore how their solutions could improve AI explainability. Through the design jam, we connected cross-functional teams from the UK, Ireland, India, Indonesia, and Australia to collaboratively brainstorm and develop actionable ideas.

THE SCOPE

My mission was to design and facilitate a workshop for the startup Zupervise, bringing together a diverse group that included C-level executives, a legal practitioner, product team members from Meta, and graduate students from the Lee Kuan Yew School of Public Policy (LKYSPP).

THE PROCESS

We began by mapping Zupervise's services and offerings, then took a deep dive into their core challenges around AI trust and transparency. To guide ideation, we developed a user persona that kept participants focused on who they were designing for throughout the workshop.

Zupervise is a unified risk transparency platform My mission was to design and facilitate a workshop for the startup Zupervise, bringing together a diverse group that included C-level executives, a legal practitioner, product team members from Meta, and graduate students from the Lee Kuan Yew School of Public Policy (LKYSP).

THE PROCESS

We began by mapping Zupervise’s services and offerings, then deep-dived into their core challenges around AI trust and transparency. To guide the ideation process, we developed a user persona that helped participants stay focused on who they were designing for throughout the workshop.

for AI deployments in the regulated enterprise.

Zupervise platform offers a knowledge graph for AI-Risk intelligence,

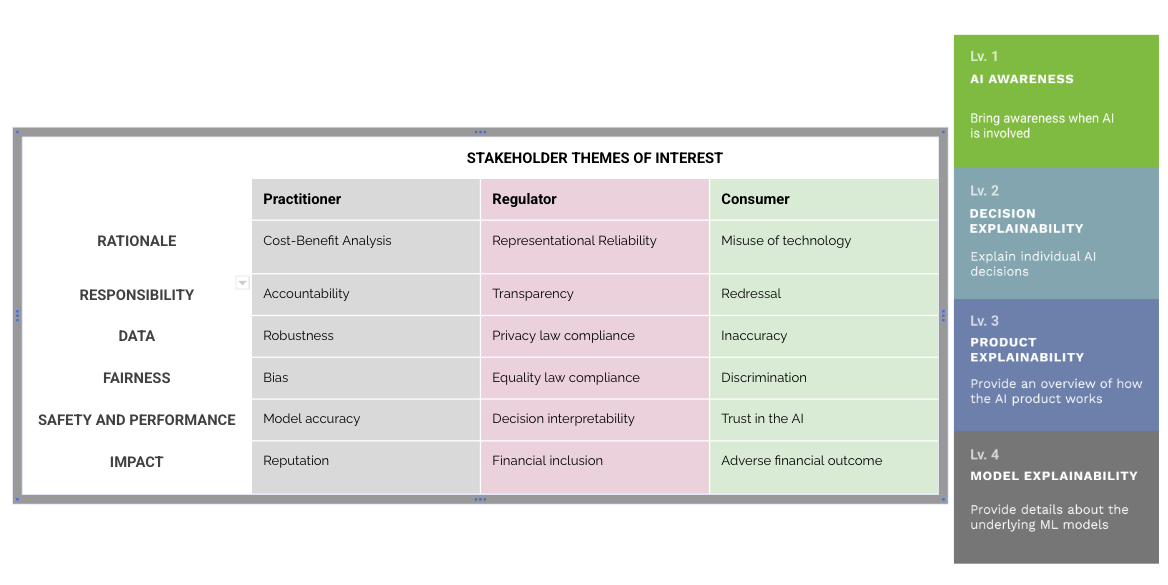

a risk observability capability and an out-of-the-box early warning system to assess, mitigate & report on AI-Risks. Imagine the product a Visionary Risk Office Leader when the business leverages machine-learning algorithms, he want to institute an explicit process for validating and approving the design, development & deployment process so that the organisation is protected against a wide and growing range of Al risks. However he also need to meet the diverse needs of practitioners regulators and consumers.

a risk observability capability and an out-of-the-box early warning system to assess, mitigate & report on AI-Risks. Imagine the product a Visionary Risk Office Leader when the business leverages machine-learning algorithms, he want to institute an explicit process for validating and approving the design, development & deployment process so that the organisation is protected against a wide and growing range of Al risks. However he also need to meet the diverse needs of practitioners regulators and consumers.

During virtual brainstorming, a common theme emerged: how to translate complex datasets from the backend into a digestible format that supports the varied needs of internal stakeholders.

We selected a winning idea: a visually intuitive, dynamic and customizable report dashboard that filters balanced information for different internal stakeholders before a new AI model is deployed.

The solution makes information easier to digest and strengthens internal audit.

- Intuitive: most reports are presented visually (e.g. flowcharts, illustrations) with simplified wording and an explanation of why or how things work. A policy/regulatory section includes a glossary for better understanding.

- Dynamic: backend data is interconnected with a simple front end, providing better progress tracking and decision transparency across the company.

- Customized: the report filters information relevant to different audiences, and Ricardo can customize which information is shown.

The prototypes co-created for this project are used to explore different aspects of the insights and considerations from both product and policy perspectives. These insights were developed to guide product makers in their thinking around the design of AI explainability experiences. They are not step-by-step instructions; rather, they offer a range of considerations to help identify and prioritize explainability needs, objectives and solutions in particular product contexts.

Both startups and established companies can draw on these insights to create explainability mechanisms for new and existing products and features. They can also be used in the assessment of existing explainability experiences. In all instances, they are intended to help product makers cultivate greater understanding of their AI-powered products, particularly among general product users.

THE LEARNING

Product to policy

The findings of this project point to the value of ongoing research into the ways product makers can most effectively enable people's understanding of AI systems and processes. Through cross-sector collaboration, product makers and policymakers can undertake further research into explainability techniques and explore methods for assessing the degree to which people comprehend AI systems. Together with this report, ongoing research can help policymakers facilitate the creation of people-centric explainability and trustworthy AI experiences. In consultation with industry, they can improve the impact of these policies by incorporating strategies that make them more actionable for product makers.

Pen and Paper, Show and Screenshot!

Paper and pen encourage rapid ideation and remove technical barriers, making the process more inclusive for everyone, regardless of design experience. They level the playing field, shifting the focus from visual polish to the strength of the idea itself. This hands-on approach sparks spontaneous creativity, promotes quick iteration, and keeps the conversation anchored in the user's needs. By stripping back the tools, we created space for deeper thinking, clearer communication, and more human-centered outcomes.

Screenshots of the sketches

CATEGORY

Product Policy

SCOPE

Workshop Facilitation

UX Designer

DURATION

3 months

LOCATION

Worldwide

TEAM

Facilitator

Larasati (Lead)

Clarissa

Zupervise

Mahesh

Janhvi Pradhan Deshmukh

Beyond Reach UK

Trish

LKYSPP

Dylan

TTC Labs & Meta

Peter

Chavez Procope

UC Davis

Tom Maiorana

Information Office, UK

Abigail Hackston

2020 © Meta TTC Lab